Hire Certified Data Engineers to Power Your Data Pipelines

Need to manage, transform, or scale your data infrastructure?

Hire data engineers from eDev in as little as 7 days. Our certified data engineers specialize in building robust data pipelines, integrating sources, and optimizing your data architecture for analytics and AI applications.

Hire A Top Data Engineer!

Work with these top data engineers from India and Latin America.

Our skilled data engineers bring innovation, expertise, and a commitment to excellence to every task.

$25/hr

Yashwanth (5+ Years)

Software developer with 5+ years of experience in Power BI and SQL Server. Skilled in building robust solutions and delivering insights that drive business growth.

Key Skills:

Power BI, SQL, SQL Server, Snowflake

$25/hr

Irfan (4+ Years)

Power BI and SQL Server expert with 4+ years of experience creating dynamic dashboards from large datasets. Proficient in ETL, DAX, and automating data refresh with PowerShell and Task Scheduler.

Key Skills:

Power BI, SQL Server, SSRS, Power BI reporting

$40/hr

Varun (7+ Years)

Seasoned Data Scientist with 7 years of experience in advanced analytics, machine learning, time series forecasting, Python, SQL, and MLOps.

Key Skills:

Linux (Alpine), AWS, Python, Shell scripting

$40/hr

Sarath (6 Years)

Senior Machine Learning Engineer with 6 years of experience in MLOps, AI, data science, and computer vision.

Key Skills:

Python, Machine learning, Computer vision, NLP, LLMs

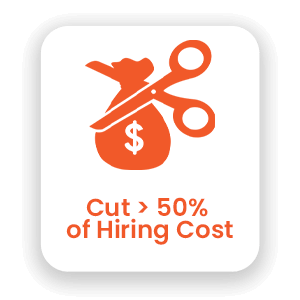

Why Smart Brands Choose eDev to Hire Data Engineers

How to Hire Data Engineers

Tell us your requirements

Get a list of matched candidates

Shortlist candidate profiles

Participate in the vetting process if interested

Onboard selected candidates within a day

Begin working with the candidate

Scale your team up or down based on your project's progress and the data engineer's performance.


Frequently Asked Questions

How do I hire a data engineer?

To hire a data engineer through eDev, simply share your project requirements. We'll match you with vetted candidates, help schedule interviews, and onboard your preferred data engineer in days, not weeks.

What does a data engineer do?

A data engineer designs, builds, and maintains systems that gather, store, and process raw data. They create data pipelines, optimize storage, and ensure clean, usable datasets for data scientists and analysts.

How can eDev help me hire skilled data engineers?

eDev connects you with pre-vetted, certified data engineers from global tech hubs. We streamline hiring with personalized matches, flexible contracts, and support throughout the engagement.

What technical skills should a data engineer have?

A strong data engineer should have experience with SQL, Python or Scala, ETL tools, data modeling, and big data technologies like Hadoop or Spark. Familiarity with cloud platforms like AWS, GCP, or Azure is also valuable.

Why should I hire a dedicated data engineer?

Hiring a dedicated data engineer ensures you have focused, consistent support to manage growing data needs. They can build reliable infrastructure that scales with your product and enhances decision-making.

How quickly can I hire a data engineer through eDev?

You can hire a skilled data engineer in as little as 7 days with eDev’s fast-track recruitment process.

What industries does eDev serve with data engineering talent?

Our data engineers have experience across industries including fintech, healthcare, e-commerce, logistics, SaaS, and more.

How do I evaluate the right data engineer for my project?

eDev provides detailed profiles, including experience, tech stack, and project fit. You can also conduct interviews and technical assessments to ensure alignment with your goals.

Is there a minimum contract duration to hire a data engineer?

No. We offer flexible engagement models with no upfront contracts or long-term commitments. Short-term contracts are possible, but most clients prefer at least 3 to 6 months for sustained data engineering impact.

Can I scale my data engineering team with eDev?

Yes. You can start with one certified data engineer and scale up or down based on project needs and growth.

Do your data engineers have experience with big data platforms?

Absolutely. Our engineers are well-versed in big data platforms like Hadoop, Spark, and distributed cloud-based environments.